If you’re a fan of movie techniques you’ll surely know what a dolly zoom is. But for those who don’t know, it’s an effect where the subject stays the same size in the frame while the background appears to zoom in or out. The name comes from the fact that you zoom your camera lens while moving the camera on a dolly, so that the subject keeps filling the same portion of the frame. And while it can be subtle, it can also be a fun way to make exciting footage for your video creations. So I wondered how easy it would be to replicate the effect in Blender’s Python scripting interface.

Turns out it’s not difficult at all. However, it took a bit of math to come to that conclusion. I’ve included that here so you can have the complete picture, but feel free to skip ahead if math freaks you out. To make things simple I based my calculations on the assumptions in the diagram below. Basically, Sd is the distance to the subject, and I kept things simple by placing the subject at the origin of the Y-axis, so Sd is also the camera’s distance from the origin along the Y-axis. Sj is then half the height of the subject (halved for easy trigonometry) and Sz is half the virtual-sensor size in Blender, with Fl being the camera’s focal length. You can see these details in Blender’s camera settings tab.

The main thing to note is that we can calculate the field-of-view (FV) from the triangle on either side of the lens position. Because both triangles share that angle they are similar, so their sides must be proportional. You can see that in the simple equations below: as the sensor and subject sizes don’t change, any change in the subject distance requires a corresponding change in the focal length. Basically all that means is that if you double the camera distance (i.e. Sd) you just need to double the focal length (Fl) too.
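Written out with the symbols defined above, the relationship looks something like this. It is a reconstruction from the description rather than a copy of the original figure, and it assumes FV is the full viewing angle, with Sz and Sj being the half-sizes:

```latex
\tan\!\left(\frac{FV}{2}\right) = \frac{S_z}{F_l} = \frac{S_j}{S_d}
\quad\Longrightarrow\quad
F_l = \frac{S_z}{S_j}\, S_d
```

Since Sz and Sj are fixed, Fl is simply a constant times Sd. For example, a camera that frames the subject nicely at 10 units with a 50 mm lens would need a 100 mm lens at 20 units.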

So I wrote some simple Blender BPY Python code to calculate focal lengths for all elements in a pre-planned list of camera distances, together with code to move the camera through them and render the results to image files. But before I go through the code I’ll explain how to use it, for anyone who would rather not dig into how the code works. If you want to have a go you can download the Blender file, together with the required image files and the Python script, over at the Parth3D experiments GitHub repository. Simply download the files into a single folder and you’re ready to go.

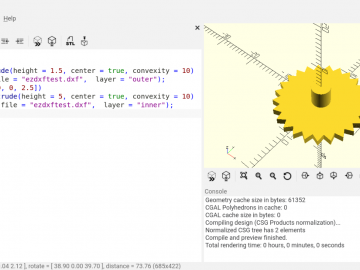

Now you need to open the Blender file and go to the scripting tab. Then open the Python script and you should have a Blender window that looks something like the screenshot below. So that you can see the text output (which will help you know when the script ends), make sure Blender was started from a terminal (Linux) or, on Windows, open the system console by selecting Toggle System Console from the Window menu. Then click the run triangle icon to start rendering.

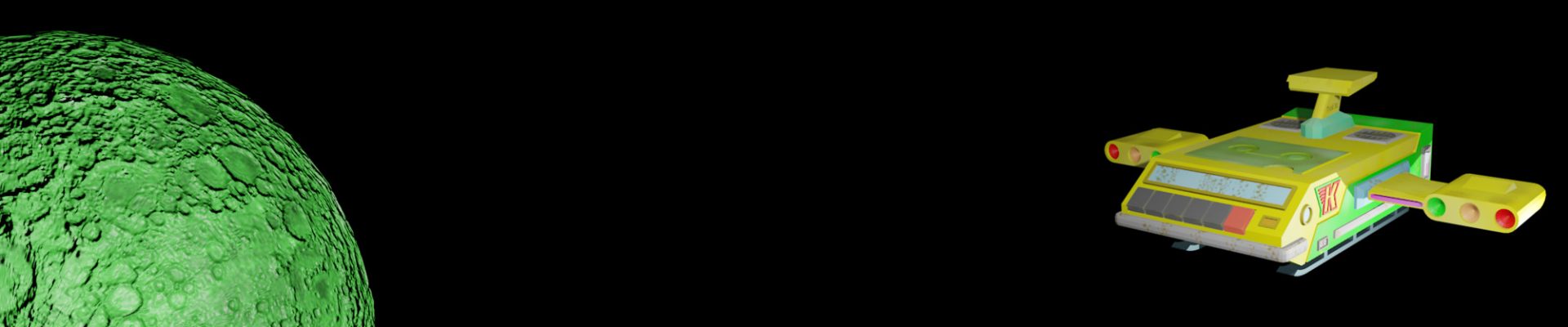

If you have a look in your folder you’ll see that a ‘renders’ folder has been created and is filling up with image files. In total there will be 300 of them (60 frames per dolly, repeated 5 times) if you haven’t changed the Python code. Each one is named in a specific way, like ‘frame-000009.png’, so that we know how they’re ordered. That’s important because we have to stick them all together into a movie file. You can see some of the frames below, from the start, middle and end of the rendering. Apart from some distortion with the very wide focal lengths (see e.g. left frame below) you can see that the witch stays the same size while the background looks like it’s being zoomed.

I stitched the frames into a video with FFmpeg using the command below, run from the same folder as the Blender file. I ran it on Windows, but you should hopefully be able to do much the same thing on other operating systems.

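    ffmpeg.exe -framerate 30 -i ".\renders\frame-%%06d.png" -c:v libx264 -pix_fmt yuv420p movieout.mp4

The doubled %% is how a percent sign is escaped inside a Windows batch file. If you type the command directly at a prompt, or use the shell on another operating system, a single % (i.e. frame-%06d.png) should be what you need, with forward slashes in the path on Linux and macOS.

If you look in your Blender file’s folder again you should now see an MP4 file named ‘movieout.mp4’, just like the video I embedded below. Play it to bask in the glory of having created the famous dolly zoom effect in Python (well, for around ten seconds anyway). And if you want to know how the code works you can read on below. Or, if you don’t, you can still adapt the code by changing the obviously named key variables.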
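Coming back to the stitching step for a moment: one way to sidestep the shell-escaping question altogether is to build the FFmpeg arguments as a Python list. The sketch below is only an illustration. The function name `build_ffmpeg_command` is made up for this example and is not part of the downloaded script, and it assumes an `ffmpeg` executable is available on your PATH.

```python
import os

def build_ffmpeg_command(render_dir, out_file, framerate=30):
    """Return the FFmpeg argument list for stitching numbered PNG frames.

    Hypothetical helper for illustration only; it mirrors the options
    used in the command shown in the post.
    """
    # A single % is correct here because no shell or batch file is involved
    pattern = os.path.join(render_dir, "frame-%06d.png")
    return [
        "ffmpeg",
        "-framerate", str(framerate),
        "-i", pattern,
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",
        out_file,
    ]

cmd = build_ffmpeg_command("renders", "movieout.mp4")
print(" ".join(cmd))
```

To actually run it you could pass the list to `subprocess.run(cmd, check=True)` from an ordinary Python session started in the folder that holds the ‘renders’ folder.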

So the code itself is really quite short. As I said earlier, it’s actually a deceptively easy effect to recreate using Blender and Python. I won’t cover everything in the script, so you’ll need to refer to the downloaded ‘bpydollyzoom.py’ file for the rest. The central part of the code calculates a list of camera distances that run from a start distance (sd) out to an end one (ed) and back again, spread over a number of frames (numfr) which determines the number of elements in the returned list. The version below creates distances such that the camera movement is slower near the closest and farthest points, which arguably will look better to viewers (there’s a linear version in the script too, if you fancy a play).

    import math

    def make_sin_dist_list(sd, ed, numfr):
        dl = []
        for c in range(0, numfr):
            # Angle sweeps one full cycle (0 to 2*pi) over the frames
            ang = (c / (numfr - 1)) * 2 * math.pi
            # Eased fraction: 0 at the start, 1 mid-way, back to 0 at the end
            dd = 1 - ((math.sin(ang + (math.pi / 2)) * 0.5) + 0.5)
            # Map the fraction onto the sd-to-ed distance range
            dist = ((ed - sd) * dd) + sd
            dl.append(dist)
        return dl

We can now create a list containing the distances (passed in as dl) and corresponding focal lengths, using the code below and initialisation values for the camera distance (icd) and focal length (ifl). We’ll see what they are shortly. Looking closely you can see that the code simply works on our rule that doubling the camera distance doubles the focal length. Nothing more complicated is needed.

    def make_dfl_list(dl, icd, ifl):
        dfl = []
        for fd in dl:
            # Scale the focal length by the same factor as the distance
            fl = (fd / icd) * ifl
            dfl.append([fd, fl])
        return dfl

Now we’ll do some BPY (Blender Python) magic to find the camera and get some information about it. The last two lines below retrieve the camera Y-axis position and focal length, which you’ll have noticed are actually the two initialisation values mentioned above. Basically I set the camera up for a good static render in Blender, so it should also allow for a good set of dolly zoom render images.

    import bpy

    # Make sure we are in object mode before touching the camera
    bpy.ops.object.mode_set(mode='OBJECT')
    objs = bpy.data.objects
    cam = objs['Camera_MONO']
    cpy = cam.location[1]  # initial camera Y-axis position
    sfl = cam.data.lens    # initial focal length

Next we define some variables that control the dolly zoom effect. The value of dollystart is the closest distance of the camera from the subject along the Y-axis, and dollylength is the distance over which we will move the camera. The value of framesperdolly gives us 30 frames out and 30 back in, i.e. 60 frames in total which, at 30 frames per second, is two seconds of video per dolly zoom cycle (from the closest Y-axis point, to the farthest, then back to the closest). And we will get the code to repeat the renders 5 times to give a total video duration of 10 seconds.

    dollystart = 2
    dollylength = 30
    framesperdolly = 60
    framerepeats = 5

You can easily adjust those values for a bit of fun, or even change the camera distance list for different effects. And then you can use the code below to create the distance and focal length lists.

    dl = make_sin_dist_list(dollystart, dollystart + dollylength, framesperdolly)
    dfl = make_dfl_list(dl, abs(cpy), sfl)

I won’t cover the actual rendering here in any depth as it’s pretty standard Python code. However, for completeness, I’ve put it below. Basically it just iterates over the distance and focal length values, rendering an image for each element in our list.

    import os

    # "//" is Blender's prefix for the folder containing the .blend file
    renderto = os.path.join(bpy.path.abspath("//"), "renders")
    framenum = 0
    for fr in range(0, framerepeats):
        for c in range(0, framesperdolly):
            renderpath = os.path.join(renderto, "frame-" + format(framenum, '06d') + ".png")
            print("Rendering frame " + str(framenum + 1) + " of " + str(framesperdolly * framerepeats))
            bpy.context.scene.render.filepath = renderpath
            cp, fl = dfl[c]
            # The camera sits on the negative Y side, looking towards the origin
            cam.location[1] = -cp
            cam.data.lens = fl
            bpy.ops.render.render(write_still=True)
            framenum = framenum + 1

Finally, the code sets the camera Y-axis location and focal length to the initial values, so our Blender file remains as it was before we ran the script.

    cam.location[1] = cpy
    cam.data.lens = sfl

And that’s it, we now have a dolly zoom script we can use to compete with blockbuster movies like Vertigo and Jaws, to name just a couple of many. I hope you enjoy playing around with it 🙂