As well as 3D imaging and design, my passions include computer programming. When you think about it, that’s a useful combination of interests for anyone who likes 3D, especially as many 3D design tools are either made better by, or adamantly require, knowledge of a programming language. OpenSCAD, for example, requires you to describe your models in a C-like language. And Blender, with its scripting tools, lets you do things in Python that you might never imagine otherwise. So, having bought a Samsung S20 Android phone in the first half of 2021, I decided to put my knowledge to the test by writing an app to access data from the Time-of-Flight depth camera.

So I wrote a small test app, based on some initial code I found on the excellent GitHub repository of Luke Ma. That code was built around a video feed, and wasn’t written for compatibility with all Camera2 API devices, so I changed it to work on more devices and to capture individual frames for use in a simple camera interface. Then I made some further Camera2 compatibility improvements, and added code to average the depth (and depth-confidence) data over a few frames, to reduce noise. And finally I added code to read metadata about the camera, including pixel size, physical size and focal length: all things essential for converting the data to x, y and z coordinates as point clouds.
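To make those last two steps more concrete, here is a minimal Python sketch of frame averaging and of turning a depth image into x, y, z points with a simple pinhole camera model. It is not the code from my app: the function names are made up for illustration, and it assumes depth values are in millimetres, that zero means "no reading", that the optical centre sits at the middle of the image, and that the depth is measured along the optical axis.

```python
def average_frames(frames):
    """Average several depth frames pixel by pixel.

    Each frame is a list of rows of depth values. A value of 0 is
    treated as "no reading" (an assumption) and left out of the mean.
    """
    height, width = len(frames[0]), len(frames[0][0])
    averaged = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            samples = [f[y][x] for f in frames if f[y][x] > 0]
            if samples:
                averaged[y][x] = sum(samples) / len(samples)
    return averaged


def depth_to_points(depth_mm, focal_mm, sensor_width_mm):
    """Convert a depth image to (x, y, z) points using a pinhole model.

    focal_mm and sensor_width_mm stand in for the camera metadata
    (focal length and physical sensor width). The image width in
    pixels is taken from the depth image itself.
    """
    height, width = len(depth_mm), len(depth_mm[0])
    pixel_pitch_mm = sensor_width_mm / width   # physical size of one pixel
    focal_px = focal_mm / pixel_pitch_mm       # focal length in pixel units
    # Assumption: the optical centre is the centre of the image.
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    points = []
    for v in range(height):
        for u in range(width):
            z = depth_mm[v][u]
            if z <= 0:
                continue  # skip pixels with no depth reading
            x = (u - cx) * z / focal_px
            y = (v - cy) * z / focal_px
            points.append((x, y, z))
    return points


# Two tiny 2x2 "frames" of made-up depth values in millimetres.
frames = [
    [[1000, 0], [1000, 1000]],
    [[1200, 0], [1000, 800]],
]
smoothed = average_frames(frames)
cloud = depth_to_points(smoothed, focal_mm=2.0, sensor_width_mm=4.0)
print(smoothed)
print(len(cloud), "points")
```

The same averaging can be applied to the depth-confidence image. If your camera reports distance along each ray rather than along the optical axis, the conversion needs an extra correction that this sketch leaves out.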

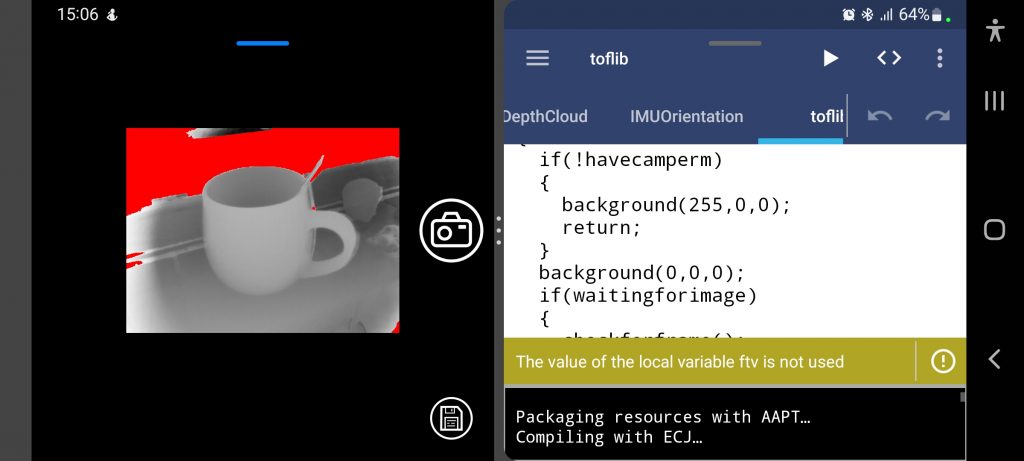

I wrote that app in AIDE, which is an excellent Android IDE for speedy app prototyping on a phone or tablet. It worked well, but really I didn’t want to create a whole new app every time I wanted something different: basically I wanted a more maker-friendly version. So I decided to port the code to work with another excellent Android app, Calsign’s APDE, which allows you to write programs in Processing on your Android device, and even run them with a single click. The result is what you see in the Android screenshot below, from my Samsung S20 phone.

It turned out that simply transferring the class to an APDE app would not work. After a lot of exploring, and web searching, I realised the problem: when the camera code is used as a class in APDE, the Camera2 API functions have no way to pass messages back through Java callbacks. In AIDE that didn’t matter because Camera2 defaults to using the looper in the main activity, which isn’t available in the fragment code that APDE uses to run the app. So I made quite a few changes to the camera code, mostly to implement callbacks that bypass the need for access to the main activity, which I think is a better way of doing it anyway. And if that means nothing to you, worry not: the image below shows that it worked.

Having previously written code to process Intel RealSense depth camera data, I decided to port some of it to this project, and add some new improvements. So the GitHub repository for my code has files that can be used with Processing, Python 3 and web pages using JavaScript. The Python 3 code was mostly written and tested using the excellent Pydroid 3 Android app, so you can use it on your PC, phone or tablet. And the JavaScript code was written using p5.js, to continue the Processing theme, on the Replit web-based development platform. All of the different code versions can load depth data, depth-confidence data and metadata, so files saved from the APDE camera code can be used in a myriad of maker-friendly ways.

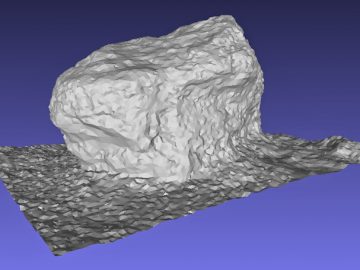

To give you an idea of what the project is all about, below is the p5.js JavaScript example embedded as a repl from Replit. It shows a point cloud of my 2×2 Rubik’s Cube imaged from above. You can drag your mouse, or finger, over it to change the view. And the slider below the point cloud (scroll down if you can’t see it) lets you change the minimum depth-confidence a point needs in order to be displayed, allowing you to explore how it affects the 3D data.
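If you are wondering what the slider is doing behind the scenes, the idea can be sketched in a few lines of Python. This is an illustration rather than the actual p5.js code: the function name is hypothetical, the data is made up, and it assumes each point comes with a confidence value between 0 and 1.

```python
def visible_points(points, confidences, min_confidence):
    """Keep only the points whose confidence is at least min_confidence."""
    if len(points) != len(confidences):
        raise ValueError("points and confidences must have the same length")
    return [p for p, c in zip(points, confidences) if c >= min_confidence]


# Made-up sample data: three (x, y, z) points and their confidences.
points = [(0.0, 0.0, 300.0), (1.5, 0.0, 310.0), (3.0, 0.0, 900.0)]
confidences = [1.0, 0.6, 0.1]

# Raising the threshold, like moving the slider, hides uncertain points.
for threshold in (0.0, 0.5, 0.9):
    kept = visible_points(points, confidences, threshold)
    print(threshold, len(kept))
```

A low threshold keeps everything, noise included, while a high one leaves a sparser but cleaner cloud.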

Finally, if you want to use the code yourself, with your Camera2-compliant depth camera and phone, please head to the GitHub repository to download or fork it.