Here’s something that you might not know: some Google Pixel Android phones have an option in the Pixel Camera app to turn on ‘social media depth features’. Sounds exciting, doesn’t it? I bet some people who find it buried in the settings can be forgiven for thinking it’s useful when you’re updating your feeds from the bottom of interesting holes in the ground. But no, it actually means that photos are augmented with an embedded depth map image, created using AI and/or multiple lenses, that we can use to make stereo 3D photos in Python 3! Below you can see the option in Pixel Camera on a Pixel 8 Pro phone. It’s easily found if you open the options pop-up, choose more settings, then go to advanced settings.

If you’ve read any of my posts about the Python 3 Photos3D library, you’ll know that we can use it to do things like splitting multiple images out of container photos and making stereo side-by-side photos using depth maps. So I’ve added an example of using it with SM depth enabled photos to the GitHub repo, which you can download here to make life easy. You can just run the pixelsmdepth.py example straight away if you feel confident, but below I’ll go through the code and give some explanations that will hopefully make everything clear.

If you open the example in your favourite text editor, you’ll see it starts with some preliminaries. First it imports some common Python libraries for file handling, image data and plotting (os, sys and io are part of the standard library; Pillow and matplotlib are separate installs). Then it imports the three Photos3D modules central to what we need to do: the jpegtool module analyses the photo file and splits out the embedded images, the image module does general image operations, and the depthmaps module creates the stereo image.

```python
import os
import sys
from PIL import Image
from io import BytesIO
from matplotlib import pyplot as plt

from photos3d import jpegtool as jpg
from photos3d import image as img
from photos3d import depthmaps as dm

# The example photo, taken in Portrait Mode with SM depth enabled
fname = "pixelsmdepthportrait.jpg"
fn = os.path.join(".", "testimages", fname)
```

The photo used by the code, whose filename is set at the end of the snippet above, looks like the image below. You can find it in the testimages folder as pixelsmdepthportrait.jpg, and when you open it you’ll see no sign that it has other images embedded. Importantly, it was taken in Portrait Mode in Pixel Camera on a Pixel 8 Pro phone. You can still get a depth map in normal Photo Mode if you want a wider-angle photo, but you’ll likely find it’s smaller and of lower quality. Of course, that may vary between Google Pixel phones, and whether you can do something similar with other phone cameras will take a little experimentation.

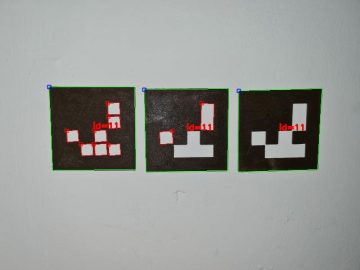

The next step in the code is finding all the section markers in the JPEG photo file (see my previous post about JPEG structures), using the jpegtool module’s find_markers function to get a list of them. The split_images function then uses that list to work out where the individual images start and end, rearranging it to reflect the container structure. We then define the index in that list where we should find the depth map image, which for my Pixel 8 Pro turned out to be 4. If that index doesn’t work for you, some trial and error may be needed, but it may help that I found a list of the embedded images in JPEG marker 3 of my photo, which the jpegtool module can print for you.
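If you’re curious what finding markers and splitting images involves, here’s a minimal standard-library sketch of the idea. It is not the jpegtool code, and the names scan_markers and split_jpegs are hypothetical. It walks each JPEG from its SOI marker (0xFFD8), uses each segment’s 2-byte big-endian length to hop to the next marker, skips the entropy-coded data after an SOS marker (0xFFDA), and stops at EOI (0xFFD9). Each marker is recorded as a (code, offset, length) tuple, an assumed layout chosen to mirror the way the example indexes ims.

```python
# Markers with no length field: TEM, RST0-RST7, SOI and EOI.
STANDALONE = {0x01, 0xD8, 0xD9, *range(0xD0, 0xD8)}


def scan_markers(data, pos):
    """Walk one JPEG image that starts with an SOI marker at data[pos].

    Returns (markers, end): markers is a list of (code, offset, length)
    tuples, where length includes the 2 marker bytes, and end is the
    offset just past the image's EOI marker.
    """
    if data[pos:pos + 2] != b"\xff\xd8":
        raise ValueError(f"no SOI marker at offset {pos}")
    markers = []
    while pos + 1 < len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        code = data[pos + 1]
        if code == 0xFF:  # fill byte before a marker
            pos += 1
            continue
        if code in STANDALONE:
            markers.append((code, pos, 2))
            pos += 2
            if code == 0xD9:  # EOI: this image is complete
                return markers, pos
            continue
        # Other segments store a 2-byte big-endian length that counts itself
        seglen = int.from_bytes(data[pos + 2:pos + 4], "big")
        markers.append((code, pos, 2 + seglen))
        pos += 2 + seglen
        if code == 0xDA:
            # SOS: skip entropy-coded data. 0xFF00 is a stuffed byte and
            # 0xFFD0-0xFFD7 are restart markers; neither ends the scan.
            while pos + 1 < len(data):
                nxt = data[pos + 1]
                if data[pos] == 0xFF and nxt != 0x00 and not 0xD0 <= nxt <= 0xD7:
                    break
                pos += 1
    raise ValueError("image has no EOI marker")


def split_jpegs(data):
    """Return a list of marker lists, one per SOI...EOI image found in data."""
    images = []
    pos = data.find(b"\xff\xd8")
    while pos != -1:
        try:
            markers, end = scan_markers(data, pos)
        except ValueError:
            end = pos + 2  # not a well-formed image here, keep searching
        else:
            images.append(markers)
        pos = data.find(b"\xff\xd8", end)
    return images
```

Called on the bytes of a photo such as pixelsmdepthportrait.jpg, a scanner like this should return the main image first, followed by any images appended after its EOI marker. Because it hops over segments by their lengths, an Exif thumbnail stored inside an APP1 segment is not mistaken for a separate image.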

flen, markers = jpg.find_markers(fn)

ims = jpg.split_images(markers)

depthindex = 4

if len(ims) < (depthindex + 1):

print()

print("Not enough embedded images!")

print("Perhaps SM Depth was not enabled?")

print("Perhaps you need a different depth map index?")

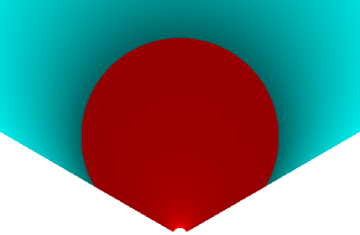

sys.exit()Now we have a list of where all the images are in the file, we can get to work extracting the embedded images using the Pillow Image module and Bytes IO library. First we extract the main photo, which should always be at index zero. Then we extract the depth map – note we need it to be in an RGB format, not the 8-bit format we get from the photo file. Also, the depth map turned out to be black at close distances, and white farther away, so I needed to make a negative version of it to get correct stereo output later.
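The byte arithmetic in the extraction code is simple: an image runs from the offset of its first marker to the offset plus length of its last one, and those bytes are handed to Pillow through BytesIO. Here’s a standard-library sketch of that step and of the negative, assuming (as the indexing in the example suggests) that each marker entry holds its byte offset at index 1 and its length at index 2. The helper names image_span, read_bytes and negative_8bit are hypothetical, not Photos3D functions.

```python
def image_span(entries):
    """Return (start, length) covering a list of marker entries.

    Assumes each entry has its byte offset at index 1 and its length at
    index 2, as the example's ims[i][0][1] and ims[i][-1][2] suggest.
    """
    start = entries[0][1]
    end = entries[-1][1] + entries[-1][2]
    return start, end - start


def read_bytes(path, start, length):
    """Read length bytes from the file at path, starting at byte offset start."""
    with open(path, "rb") as f:
        f.seek(start)
        return f.read(length)


def negative_8bit(values):
    """Invert 8-bit depth values so black (0) becomes white (255) and vice versa."""
    return [255 - v for v in values]
```

With Pillow, the returned bytes could then be opened with Image.open(BytesIO(read_bytes(fn, start, length))), which is the same pattern the example uses with jpg.read_file_data.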

```python
# Extract the main photo (image 0), from the start of its first marker
# to the end of its last marker
st = ims[0][0][1]
en = ims[0][-1][1] + ims[0][-1][2]
dl = en - st
fdata = jpg.read_file_data(fn, st, dl)
rgb = Image.open(BytesIO(fdata))

# Extract the depth map the same way, converting it to RGB
st = ims[depthindex][0][1]
en = ims[depthindex][-1][1] + ims[depthindex][-1][2]
dl = en - st
fdata = jpg.read_file_data(fn, st, dl)
depth = Image.open(BytesIO(fdata)).convert('RGB')

# Invert the depth map, since it is black for near objects and white for far ones
depth = img.negative(depth)
```

With that done, we now need to do some resizing. First, the code halves the dimensions of the main RGB photo. That’s only because the code isn’t at all quick, as it’s written in pure Python, so this speeds the example up quite a bit (halving both dimensions leaves a quarter of the pixels to process). For most uses you’ll probably want to omit this step to keep the full image resolution. Following that, we resize the depth map image so it’s the same size as the RGB one.
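As an illustration of the resizing step (not necessarily what img.resize does internally), here’s the simplest resampling method, nearest-neighbour, applied to a depth map stored as a list of rows of 8-bit values. The function names halved_size and nearest_resize are hypothetical. Nearest-neighbour has the property of only ever copying existing depth values, never blending them into new in-between ones.

```python
def halved_size(width, height):
    """Halve both dimensions, as the example does with int(nw/2), int(nh/2)."""
    return width // 2, height // 2


def nearest_resize(grid, new_w, new_h):
    """Nearest-neighbour resize of a 2D grid given as a list of equal-length rows.

    Each output pixel copies the source pixel whose scaled position it
    falls on, so no new values are invented.
    """
    old_h = len(grid)
    old_w = len(grid[0])
    return [
        [grid[y * old_h // new_h][x * old_w // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]
```

For example, halved_size(4000, 3000) gives (2000, 1500), a quarter of the original pixel count, and nearest_resize([[1, 2], [3, 4]], 4, 4) turns each value into a 2x2 block.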

```python
# Halve the RGB image to speed things up (optional)
nw, nh = img.size(rgb)
rgb = img.resize(int(nw/2), int(nh/2), rgb)

# Make RGB and depth map images the same size
nw, nh = img.size(rgb)
depth = img.resize(nw, nh, depth)
```

At this stage we have an RGB image and a depth map (which we could just save for later 3D photo processing if we preferred). Creating a stereo side-by-side image is then pretty much as described in my previous post about converting RGBD files to stereo. In fact, the code below is lifted straight from that post, except that I’ve set the disparity strength to low. There’s a good reason for that: a single photo and its depth map can’t tell you what’s hidden behind foreground objects in the way that two photos taken from different positions can, and the stronger the disparity, the bigger the gaps that have to be filled in. For different photos you may also want to play with the near, middle and far convergence options to get the best results.
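To see why a low strength helps, here’s a toy sketch of depth-image-based rendering on a single row of pixels. It isn’t the depthmaps module’s algorithm: the linear depth-to-disparity mapping, the rule of shifting each pixel left by its disparity, and the names depth_to_disparity and shift_row are all assumptions for illustration. It uses the inverted depth convention from earlier (white, 255, is near), lets nearer pixels win where two land on the same spot, and leaves None wherever nothing lands. Those holes are the hidden background that a single photo can’t supply.

```python
def depth_to_disparity(depth_row, mindisp, maxdisp):
    """Linearly map 8-bit depths (0 = far, 255 = near) to whole-pixel disparities."""
    return [round(mindisp + (d / 255) * (maxdisp - mindisp)) for d in depth_row]


def shift_row(pixels, disps):
    """Build one row of a new view by shifting each pixel left by its disparity.

    Where two pixels land on the same spot, the larger disparity (nearer
    pixel) wins; spots nothing lands on stay None (disocclusion holes).
    """
    width = len(pixels)
    out = [None] * width
    best = [None] * width  # disparity of the pixel currently at each position
    for x, (p, d) in enumerate(zip(pixels, disps)):
        nx = x - d
        if 0 <= nx < width and (best[nx] is None or d > best[nx]):
            out[nx] = p
            best[nx] = d
    return out


row = list("abcdefgh")
depth = [0, 0, 0, 255, 255, 0, 0, 0]  # a near object in the middle of the row
print(shift_row(row, depth_to_disparity(depth, 0, 2)))
# ['a', 'd', 'e', None, None, 'f', 'g', 'h']
print(shift_row(row, depth_to_disparity(depth, 0, 1)))
# ['a', 'b', 'd', 'e', None, 'f', 'g', 'h']
```

With a maximum disparity of 2 the near object leaves a two-pixel hole beside it, and with 1 it leaves only one, so a stronger effect means more guessed-at pixels to fill.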

darr = dm.depth_image_to_array(depth)

dispmin, dispmax = dm.estimate_disparity(rgb, strength='low', converge='near')

disps = dm.depth_array_to_disparity(darr, mindisp=dispmin, maxdisp=dispmax)

sbs = dm.depth_to_stereo(rgb, disps, darr)

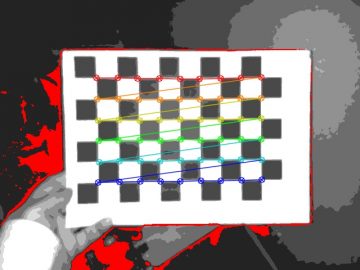

sbs.save('sbs.jpg')And with all that over we can now add a final bit of code to display the images in matplotlib. It includes three subplots: the main RGB, depthmap and side-by-side images. In case you want to know, the depth map is plotted with a ‘gray’ colour map because otherwise pyplot.imshow draws it in some funky colours even though it’s a monochrome image.

```python
fig = plt.figure()

# Top left: the main RGB photo
fig.add_subplot(2, 2, 1)
plt.imshow(rgb)
plt.title("RGB image")

# Top right: the depth map
fig.add_subplot(2, 2, 2)
plt.imshow(depth, cmap='gray')
plt.title("Depth image")

# Bottom row, spanning both columns: the stereo output
fig.add_subplot(2, 1, 2)
plt.imshow(sbs)
plt.title("SBS stereo output")

plt.tight_layout()
plt.show()
```

Now we can run our Python code to see what it produces: at the command line, something like ‘python pixelsmdepth.py’ should do the trick. The output on Pythonista on my iPad is shown below, with the main RGB image, the extracted depth map image and the generated side-by-side stereo image.

And there we have it! OK, doing this in pure Python may not be all that fast, but at least it gives us a good starting point for future attempts at making 3D stereo photos from Android phone images 🙂